This article examines how an ABB cobot, a Dingo mobile robot, and Niryo NED2 robot arms work together in the Lucas-Nuelle mini smart factory environment. The collaboration is orchestrated by a 5G Edge server. The project aims to automate the transfer of final products from the smart factory to the packaging line.

Preparation and control of the product

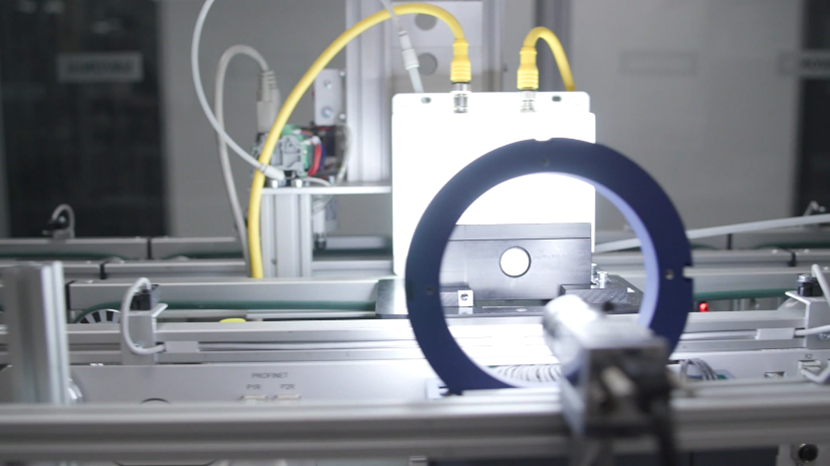

First, a product is ordered from the smart factory, which then manufactures it. At the end of the manufacturing line, a machine vision (mVision) system integrated with the smart factory examines the product. It checks whether the product meets the desired specifications and records the result. After inspection, the product waits to be carried to either the packaging line or the repair line.

The mVision system plays a key role in starting the next stage of the process. It shares critical product information, such as readiness and quality, with the central server using the MQTT protocol. MQTT is a lightweight publish/subscribe messaging protocol designed for reliable communication among IoT devices, with low bandwidth and power requirements.
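As a rough illustration of the kind of message the mVision system might send, the sketch below builds a JSON payload and hands it to a publish function. The topic name, the field names, and the `report_inspection` helper are assumptions made for this example; the article does not describe the real message format.

```python
import json
import time
from typing import Callable

# Hypothetical topic name; the article does not specify the real one.
INSPECTION_TOPIC = "factory/mvision/inspection"


def build_inspection_message(order_id: str, passed: bool) -> str:
    """Serialize one inspection result as a JSON string."""
    return json.dumps({
        "order_id": order_id,
        "ready": True,       # the product has left the manufacturing line
        "passed": passed,    # True if it meets the ordered specification
        "timestamp": time.time(),
    })


def report_inspection(publish: Callable[[str, str], object],
                      order_id: str, passed: bool) -> None:
    """Send the result through any publish(topic, payload) function."""
    publish(INSPECTION_TOPIC, build_inspection_message(order_id, passed))


# Usage with a stand-in publisher that only records what would be sent.
sent = []
report_inspection(lambda topic, payload: sent.append((topic, payload)),
                  "order-1", True)
```

Keeping the publisher as a parameter means the same code can be exercised without a broker. With an MQTT client library such as paho-mqtt, the client's `publish` method could be passed in instead of the stand-in.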

Dingo Mobile Robot and ABB Cobot Deployments

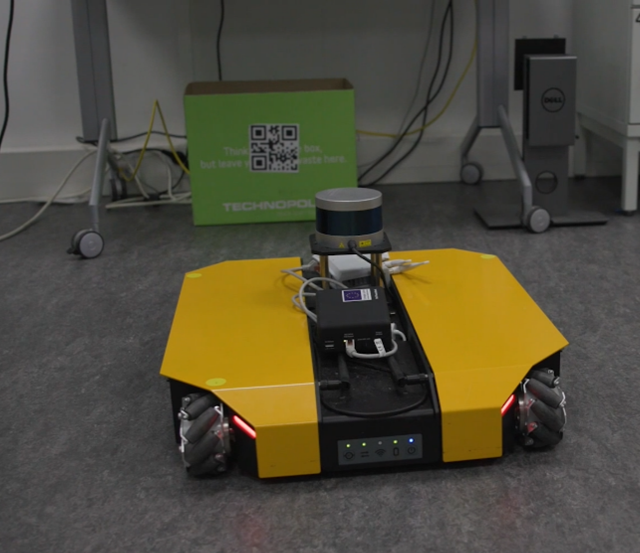

When the Dingo mobile robot is ready in the waiting area, it reports its status to the server through MQTT over 5G. This step is crucial for what comes next. The 5G Edge server learns from the mVision algorithm that a product is ready and from Dingo that it is waiting for the product. It then sends a command to the ABB cobot to pick up the ready product and place it on Dingo.
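The server's decision can be pictured as a small piece of state that waits for both reports before acting. The following is a minimal sketch of that idea, not the project's actual server code; the class name, method names, and the `PICK_AND_PLACE` command string are hypothetical.

```python
from typing import Callable


class TransferCoordinator:
    """Waits for both conditions, then asks the cobot to load the product."""

    def __init__(self, send_to_cobot: Callable[[str], None]) -> None:
        self._send_to_cobot = send_to_cobot
        self._product_ready = False
        self._dingo_waiting = False

    def on_product_ready(self) -> None:
        """Called when the inspection system reports a finished product."""
        self._product_ready = True
        self._maybe_dispatch()

    def on_dingo_waiting(self) -> None:
        """Called when the mobile robot reports it is in the waiting area."""
        self._dingo_waiting = True
        self._maybe_dispatch()

    def _maybe_dispatch(self) -> None:
        if self._product_ready and self._dingo_waiting:
            self._send_to_cobot("PICK_AND_PLACE")  # hypothetical command name
            # Reset so the next cycle needs fresh reports from both sides.
            self._product_ready = False
            self._dingo_waiting = False


commands = []
coordinator = TransferCoordinator(commands.append)
coordinator.on_dingo_waiting()   # Dingo alone is not enough
coordinator.on_product_ready()   # both conditions met: one command is sent
```

Resetting both flags after each dispatch keeps a stale "ready" report from triggering a second pick before Dingo has returned.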

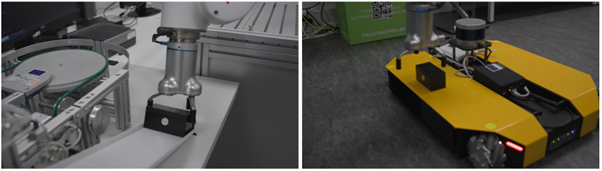

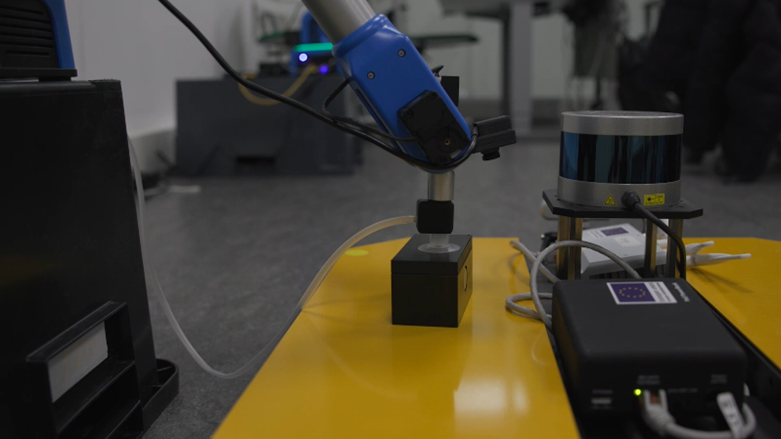

The ABB cobot listens to the server over a TCP socket. When the command arrives from the server, the cobot approaches the ready product with precise positioning, grabs it with its special gripper, carries it to the Dingo mobile robot, and releases it.
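The listening side of such a TCP link can be sketched in a few lines. The example below is written in Python purely for illustration; the cobot's own controller program is not shown in the article, and the newline-terminated text protocol, the command name, and the step names are all assumptions.

```python
import socket
from typing import List


def read_command(conn: socket.socket) -> str:
    """Read bytes until a newline and return the command without it."""
    buffer = b""
    while not buffer.endswith(b"\n"):
        chunk = conn.recv(1024)
        if not chunk:  # the peer closed the connection
            break
        buffer += chunk
    return buffer.decode("ascii").strip()


def plan_motion(command: str) -> List[str]:
    """Map a command to the ordered steps described in the text."""
    if command == "PICK_AND_PLACE":  # hypothetical command name
        return ["approach_product", "close_gripper",
                "move_to_dingo", "open_gripper"]
    return []  # unknown commands are ignored


# Demonstration with a connected socket pair standing in for server and cobot.
server_end, cobot_end = socket.socketpair()
server_end.sendall(b"PICK_AND_PLACE\n")
steps = plan_motion(read_command(cobot_end))
server_end.close()
cobot_end.close()
```

Separating the socket handling from the motion planning makes it possible to check the command mapping without any network connection.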

Dynamic Movement Guided by 5G

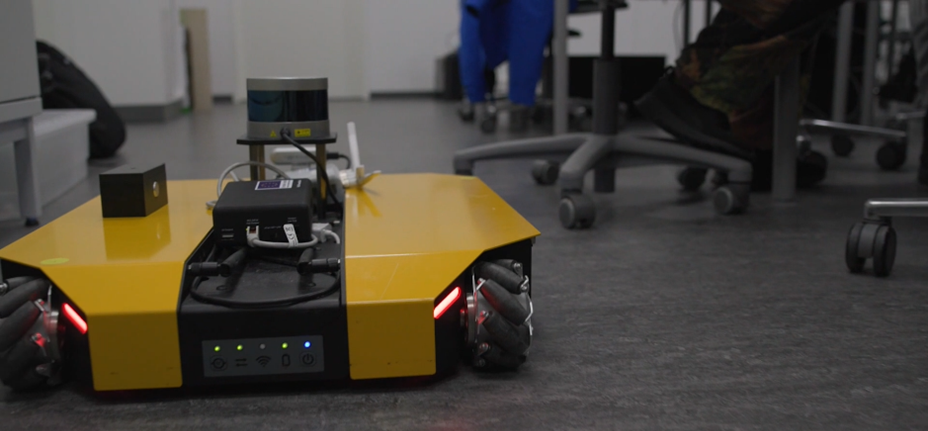

If the product matches the order, the Dingo mobile robot transports it to the first Niryo robot arm. The server directs Dingo’s movement toward Niryo over the 5G network. The ROS Navigation Stack lets Dingo move precisely while avoiding obstacles.

Niryo’s AI-Driven Control

When Dingo approaches Niryo’s workspace, a YOLO AI model detects it and Niryo takes control of Dingo, parking it in the right position. During this step, Dingo and Niryo communicate with each other in real time over the 5G network.
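One simple way to turn a detection into a parking motion is a proportional controller on the pixel error between the detected robot and a target parking pixel. The sketch below shows that idea only; it assumes an overhead camera whose image axes line up with the robot's motion axes, and the gain, speed limit, and tolerance values are made up for the example. The article does not say how the actual parking control works.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def box_center(box: Box) -> Tuple[float, float]:
    """Return the center pixel of a bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def parking_step(box: Box, target: Tuple[float, float],
                 gain: float = 0.001, max_speed: float = 0.1,
                 tolerance_px: float = 5.0) -> Tuple[float, float, bool]:
    """Return (vx, vy, parked) for one detection.

    Assumes image axes match the robot's motion axes; a real setup
    would need a calibrated camera-to-robot transform instead.
    """
    cx, cy = box_center(box)
    ex, ey = target[0] - cx, target[1] - cy
    if abs(ex) <= tolerance_px and abs(ey) <= tolerance_px:
        return (0.0, 0.0, True)  # close enough: stop and report parked

    def clamp(v: float) -> float:
        return max(-max_speed, min(max_speed, v))

    return (clamp(gain * ex), clamp(gain * ey), False)


far = parking_step((100, 100, 200, 200), target=(320, 240))
near = parking_step((268, 188, 368, 288), target=(320, 240))
```

Here `far` yields a speed-limited motion toward the target, while `near` falls inside the tolerance and reports that the robot is parked.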

After parking Dingo, Niryo uses another YOLO image recognition model to find the product on Dingo. Niryo then picks it up and drops it at a designated location.
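Object detectors usually return several candidate boxes, so some rule is needed to decide which one to pick. A minimal sketch of one such rule follows: keep only detections labeled as the product above a confidence threshold and take the most confident one. The dictionary layout, the `"product"` label, and the threshold are assumptions for illustration, not the output format of the model used in the project.

```python
from typing import Dict, List, Optional


def select_product(detections: List[Dict],
                   min_confidence: float = 0.5) -> Optional[Dict]:
    """Return the most confident 'product' detection, or None if there is none."""
    candidates = [
        d for d in detections
        if d["label"] == "product" and d["confidence"] >= min_confidence
    ]
    return max(candidates, key=lambda d: d["confidence"], default=None)


# Hypothetical detections for one camera frame.
frame = [
    {"label": "product", "confidence": 0.62, "box": (40, 50, 90, 110)},
    {"label": "dingo", "confidence": 0.97, "box": (0, 0, 300, 300)},
    {"label": "product", "confidence": 0.88, "box": (120, 60, 170, 120)},
    {"label": "product", "confidence": 0.30, "box": (200, 10, 220, 30)},
]
best = select_product(frame)
```

Returning `None` when nothing qualifies lets the caller retry with a new frame instead of attempting a pick on a doubtful detection.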

Once the product has been taken from it, Dingo returns to the product waiting point and informs the 5G Edge server that it is ready to carry the next product. The cycle then repeats.

If the product does not match the order, the mVision algorithm informs the server. The process is then the same, except that Dingo takes the product to the point for faulty products. There, the second Niryo robot takes control of Dingo, just like the first one, and picks up the faulty product.
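The two branches of the cycle amount to a routing decision based on the inspection result. The sketch below expresses that decision as a small function; the station names and map coordinates are hypothetical, since the article does not give the real poses or how they are passed to the navigation system.

```python
from typing import Dict, Tuple

# Hypothetical map poses (x in m, y in m, heading in rad).
STATIONS: Dict[str, Tuple[float, float, float]] = {
    "waiting": (0.0, 0.0, 0.0),
    "packaging": (2.0, 0.5, 0.0),   # first Niryo arm
    "faulty": (2.0, -1.5, 0.0),     # second Niryo arm
}


def next_station(carrying_product: bool, passed: bool) -> str:
    """Choose where the mobile robot should drive next."""
    if not carrying_product:
        return "waiting"            # empty: go back and wait for the next product
    return "packaging" if passed else "faulty"


def next_goal(carrying_product: bool, passed: bool) -> Tuple[float, float, float]:
    """Return the pose for the chosen station."""
    return STATIONS[next_station(carrying_product, passed)]


good_route = next_station(carrying_product=True, passed=True)
bad_route = next_station(carrying_product=True, passed=False)
return_route = next_station(carrying_product=False, passed=True)
```

The resulting pose would then be handed to whatever navigation interface the robot uses as its goal.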

Conclusion

This study demonstrated a smart factory environment equipped with robots that communicate via 5G. Once the robots are assigned their initial tasks, they carry them out automatically and in harmony with each other. The success of this integrated system relies heavily on real-time communication over the Savonia 5G network, which provides fast, low-latency communication.

Watch the video by clicking here.